By the end of this module, you’ll gain experience in putting machine learning services to work for you.

Module videos:

- Overview of machine learning [3:47]

- Machine learning overview for cloud apps (1/3) [16:17]

- Machine learning overview for cloud apps (2/3) [12:49]

- Machine learning overview for cloud apps (3/3) [16:46]

- Speech to Text API Demo [11:42]

- Speech to Text / Vertex AI Qwiklabs [14:33]

- (Soon deprecated!) AI Platform Demo (1/2) [14:53]

- (Soon deprecated!) AI Platform Demo (2/2) [13:55]

- Vertex AI Chatbot demo [12:27]

- AI Labs [14:34]

Machine Learning

Just as a precursor/forewarning: this is not an in-depth guide to machine learning (ML) or artificial intelligence! We’re going to use the tools available to us in Google Cloud, but for a deep dive into ML theory you’ll probably want to take a whole class or post-graduate program on it.

Enough preamble, on we go!

Machine Learning Basics

- Module video: Machine learning overview for cloud apps (1/3) [16:17]

- Module video: Machine learning overview for cloud apps (2/3) [12:49]

- Module video: Machine learning overview for cloud apps (3/3) [16:46]

This is my favorite image related to machine learning, bar none (c/o CloudSight):

To you, clearly they are all muffins. (joking). But a machine learning algorithm might have a hard time telling the difference! What about a traffic light covered in snow? Or the side of a semi-truck that an algorithm recognizes as sky (see: Tesla disasters).

Let’s start with an overview of machine learning [3:47]

And here is a quote from Google on their (current) favorite definition of what ML actually is:

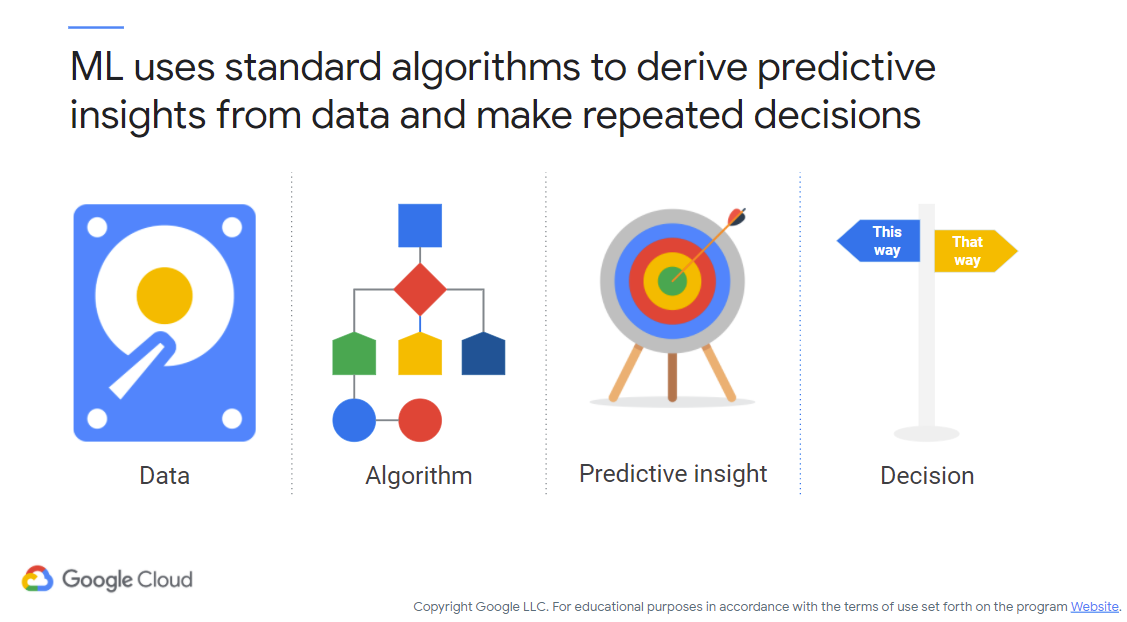

ML is a way to get predictive insights from data to make repeated decisions. You do this using algorithms that are relatively general and applicable to a wide variety of datasets.

Figure 1 shows where ML fits in with business analytics and decision making. Effectively, data gleaned from various sources can be acted upon (in various ways, depending on the needs of the business). Typically such activities would be done via data warehousing (see the section on BigQuery if you jumped ahead); however, we can use (semi-)intelligent algorithms to go further.

Figure 1: ML and Decisions

Typically with ML, you develop the algorithm that learns how to make decisions rather than hard-coding a decision making strategy yourself. In this section we’ll be taking a look at how to do that. Here are a couple of quotes from Google on the topic:

Think of a typical company and how they use their data today. Perhaps they have a dashboard that business analysts and decision-makers view on a daily basis? Perhaps a report that they read on a monthly basis? That’s an example of backward-looking use of data – looking at historical data to create reports and dashboards. This is what people tend to mean when they talk about business intelligence. A lot of data analytics is backward-looking. Instead we will use ML to generate forward looking or predictive insights.

Of course, the point of looking at historical data might be to make decisions. Perhaps business analysts examine the data and suggest new policies or rules? They suggest, for example, that it might be possible to raise the price of a product in a certain region. Now, the business analyst is making a predictive insight, but is that scalable? Can the business analyst make such a decision for every product in every region? Can they dynamically adjust the price every second?

In order to make decisions around predictive insights repeatable, you need ML. You need a computer program deriving such insights. So, ML is about making many predictive decisions from data. It’s about scaling up business intelligence and decision making.

Figure 2 (c/o Google) demonstrates some of the other typical examples of how ML can be applied. Note: it doesn’t need to simply be for business decisions! We can use it for everyday tasks such as mapping routes or picking what movie to watch.

Figure 2: ML Examples

The general concept with ML is that you typically develop and train a model that is intended to make decisions about, well, something. Three things to keep in mind with ML are that (1) models can be quite difficult to develop, (2) your decisions are only as good as the data you train the model on, and (3) automated tools can make our lives much easier.

NOTE: One thing to really consider when performing ML is that you really need to understand what it is doing! A simple example would be a video recommendation algorithm gone awry – imagine a child watching a series of age-appropriate videos only to be recommended something graphic (i.e., way beyond what they’re ready for) as a result of a poorly-trained/defined model. Treat ML as you would treat any other program – it must be demonstrably and verifiably correct.

I’ve mentioned a model several times now, but what is a model? Generally, you’ll have some concept of how to perform a task, whether it is taxes/mapping as in the prior figure, or making decisions based on meta-data tagged on videos you’ve previously watched. What a model does is learn based on historical data (more or less – some models don’t necessarily need a lot of historical data) and then make decisions based on what has been previously interpreted.

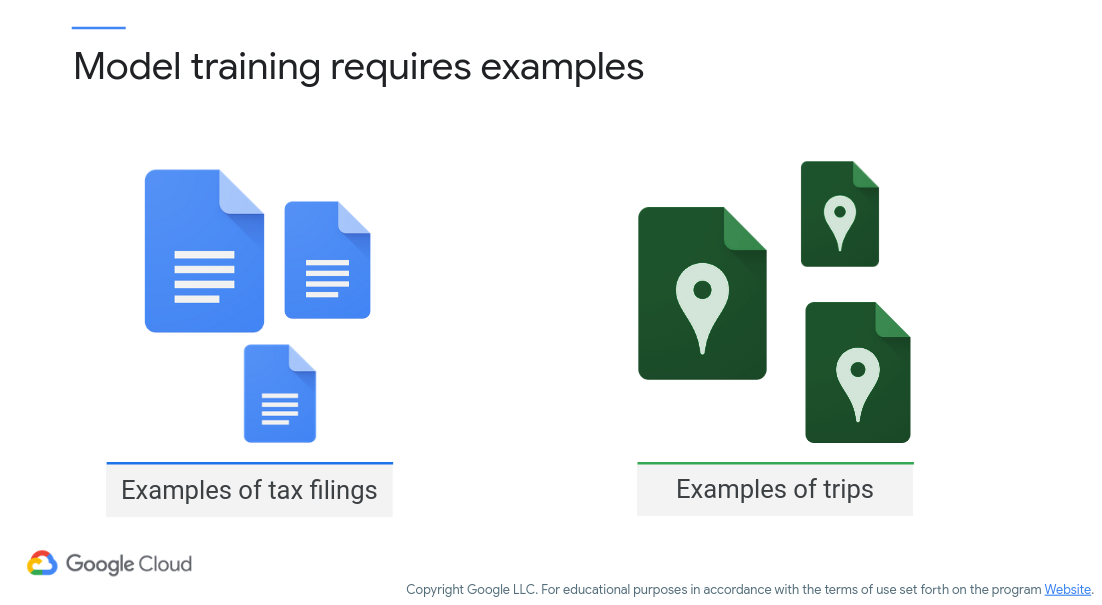

Let’s walk through a typical ML strategy: training and testing. The simplest example trains a model with a large portion of the available dataset, and then the model is tested with the remaining data. In this approach, we can generally tell whether a decision made during the testing phase is correct or not, and incorrect decisions can be fed back into the process for “fixing” our model.
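To make the train/test idea concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the data is made up (one numeric score per example), the labels "positive"/"negative" are placeholders, and the "model" is just a threshold halfway between the two class averages, not a real ML algorithm.

```python
import random

def train_test_split(examples, train_fraction=0.8, seed=42):
    """Shuffle labeled examples and split them into training and test sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

def train_threshold(train_set):
    """'Train' a toy model: the midpoint between the two class averages."""
    neg = [x for x, label in train_set if label == "negative"]
    pos = [x for x, label in train_set if label == "positive"]
    return (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2

def predict(threshold, x):
    """Label anything above the learned threshold as positive."""
    return "positive" if x > threshold else "negative"

# Hypothetical dataset: (score, correct label) pairs.
examples = [(i / 100, "negative") for i in range(0, 40)] + \
           [(i / 100, "positive") for i in range(60, 100)]

train_set, test_set = train_test_split(examples)
threshold = train_threshold(train_set)

# Testing phase: compare each prediction against the known label.
correct = sum(predict(threshold, x) == label for x, label in test_set)
accuracy = correct / len(test_set)
print(f"trained on {len(train_set)}, tested on {len(test_set)}, accuracy={accuracy:.2f}")
```

The key point is that the test examples are never shown to the model during training, so the accuracy is an honest estimate of how it handles unseen inputs.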

Figure 3 (c/o Google) shows two simple examples of this concept (i.e., training a model). If you want to make tax-related decisions, you will need historical tax information. The same goes for map-related problems (fastest trip time, etc.). You’ll need historical data to know what has worked in the past. Effectively, the model learns prior successful strategies and applies that knowledge to future decisions.

Figure 3: ML Training Examples

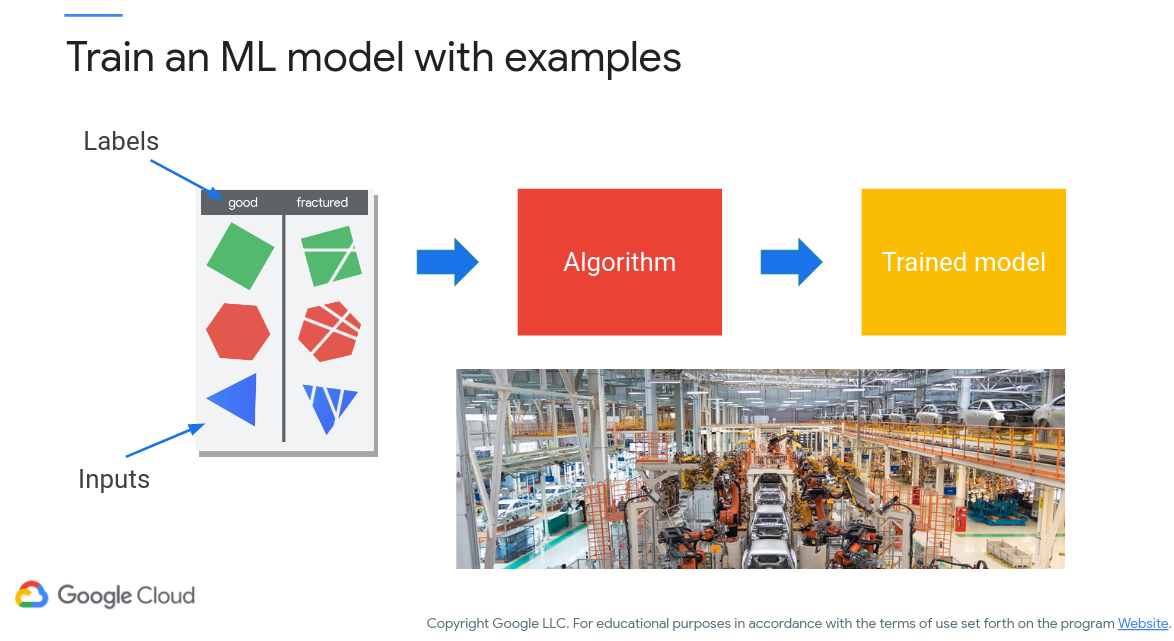

Figure 4 (c/o Google) shows an example in a bit more depth.

Figure 4: ML Training Examples (2)

The examples shown above demonstrate ML at a high level. There is an input and the correct answer for that input, or the label. Effectively, we are looking to “label” a series of inputs so that the ML model can make extrapolated decisions in the future, based on what it knows of correctly-labeled input. Figure 4 above shows how a set of images might be labeled as “good” or “fractured” based on the content inside. An ML model might then learn what it means to be “good” or “fractured” and then attempt to label new images/inputs in a similar fashion.

“Standard” Algorithm Use Cases

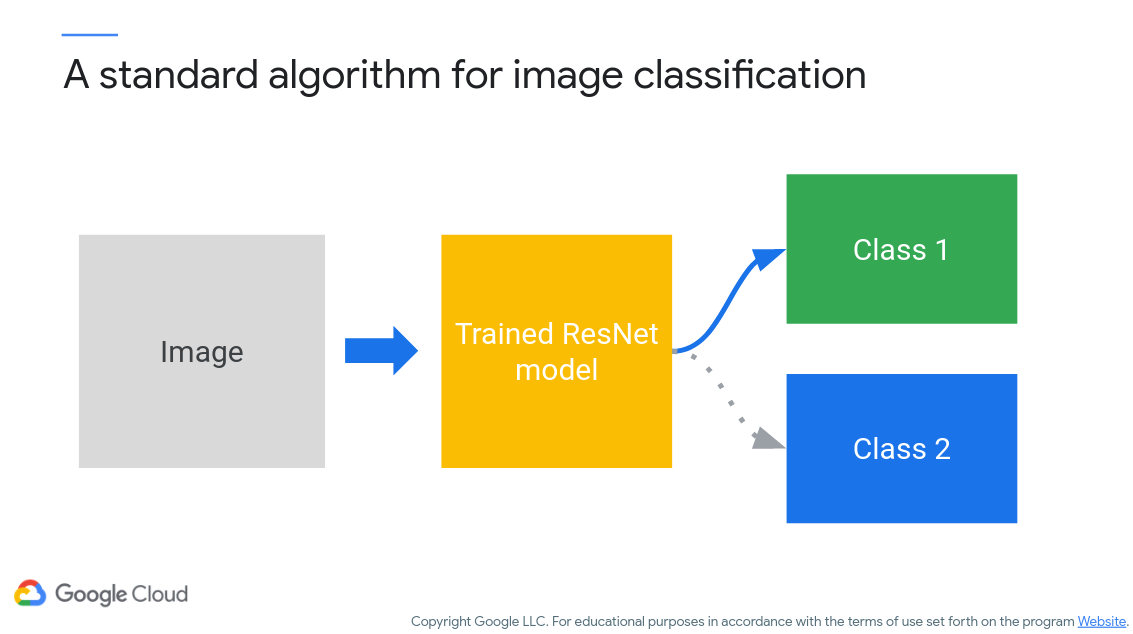

One interesting point about ML algorithms is that they tend to exist outside of use cases. For instance, image classification tasks use the same algorithm whether they are detecting “fractured”/“good” images or checking for diseased leaves in nature. Here are a few of the common ML tasks:

- Detecting a pattern in an image

- Predicting the future of a time series

- Understanding/transcribing human speech and/or text

These tasks are considered common in the field of ML. Take Figure 5 (c/o Google) for instance. Here, you see the ResNet algorithm applied to an image classification task. The labels, or classes, are what we apply to the training set so that the ML algorithm can accurately predict future classes. Note that ResNet is one common image classification algorithm – regardless of the types of images we want classified, ResNet should work just fine. Effectively, if we want multiple trained models, we simply need to provide an algorithm like ResNet with multiple training sets (i.e., each trained model will make different predictions).

Figure 5: ResNet Classification

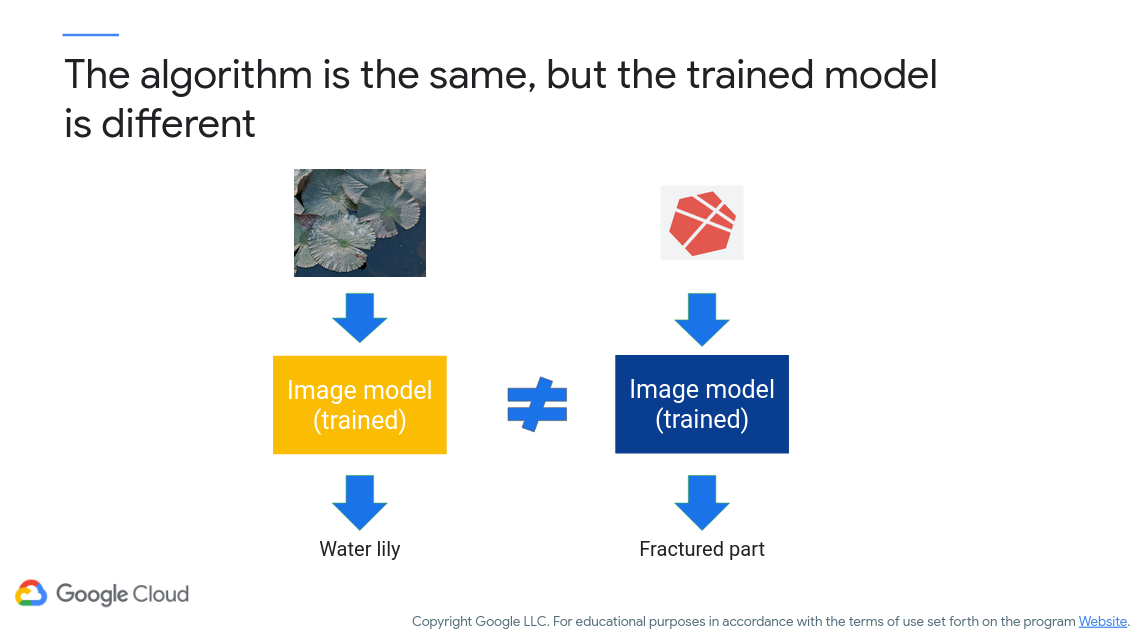

Figure 6 demonstrates how different training datasets can result in different models with the same algorithm. Effectively, the algorithm itself is generic and is a set of functions that learns how to distinguish differences from inputs based on labeled training information. The really nice thing here is that the strategies you implement are reusable from domain to domain. However, a different training session must occur for each domain – you can’t reuse trained models that don’t make sense – e.g., you wouldn’t use a “good/fractured” trained model to look for tumors in human cells!

Figure 6: Different Models, Same Algorithm
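The "same algorithm, different models" idea can be sketched with something far simpler than ResNet. The example below swaps in a nearest-centroid classifier (an assumption chosen for brevity, not what Google uses) and trains it on two hypothetical datasets with made-up two-number feature vectors.

```python
def train_nearest_centroid(labeled_points):
    """Generic algorithm: average the feature vectors seen for each label."""
    sums, counts = {}, {}
    for features, label in labeled_points:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(model, features):
    """Return the label whose centroid is closest to the input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))

# Two hypothetical domains; the feature values are invented for illustration.
parts_data = [((0.1, 0.2), "good"), ((0.2, 0.1), "good"),
              ((0.9, 0.8), "fractured"), ((0.8, 0.9), "fractured")]
leaf_data = [((0.1, 0.9), "healthy"), ((0.2, 0.8), "healthy"),
             ((0.9, 0.1), "diseased"), ((0.8, 0.2), "diseased")]

# Same training function, two different trained models.
parts_model = train_nearest_centroid(parts_data)
leaf_model = train_nearest_centroid(leaf_data)

sample = (0.85, 0.3)
print(predict(parts_model, sample))  # fractured
print(predict(leaf_model, sample))   # diseased
```

Note that the parts model will happily label a leaf measurement as "good" or "fractured": it can only answer in terms of the labels it was trained on, which is why a trained model cannot be reused across domains that don't match.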

Another consideration for ML is that your models will only be as good as your training dataset. Take the perspective that you are training a child to recognize ideal behaviors in as many situations as possible (he said expecting everybody to understand the trials and tribulations of parenting…⚆_⚆). That child will not understand how to react if presented with an unexpected situation, and the same can be said for a model trained with insufficient data. For example, let’s say you’re training an image classifier to find tumors in X-ray images. If you don’t train it with enough examples of what the various types of tumors look like, including orientation and size, there is a good chance it will either miss or misidentify tumors in the test sets.

On the flipside, it is also possible to overtrain (overfit) a model, meaning that the model only recognizes very specific instances that closely match what it saw during training. Both extremes are concerns with respect to training models. Note that we are not going into bias within ML models either – that is a massive concern better handled by a deep dive into ML.

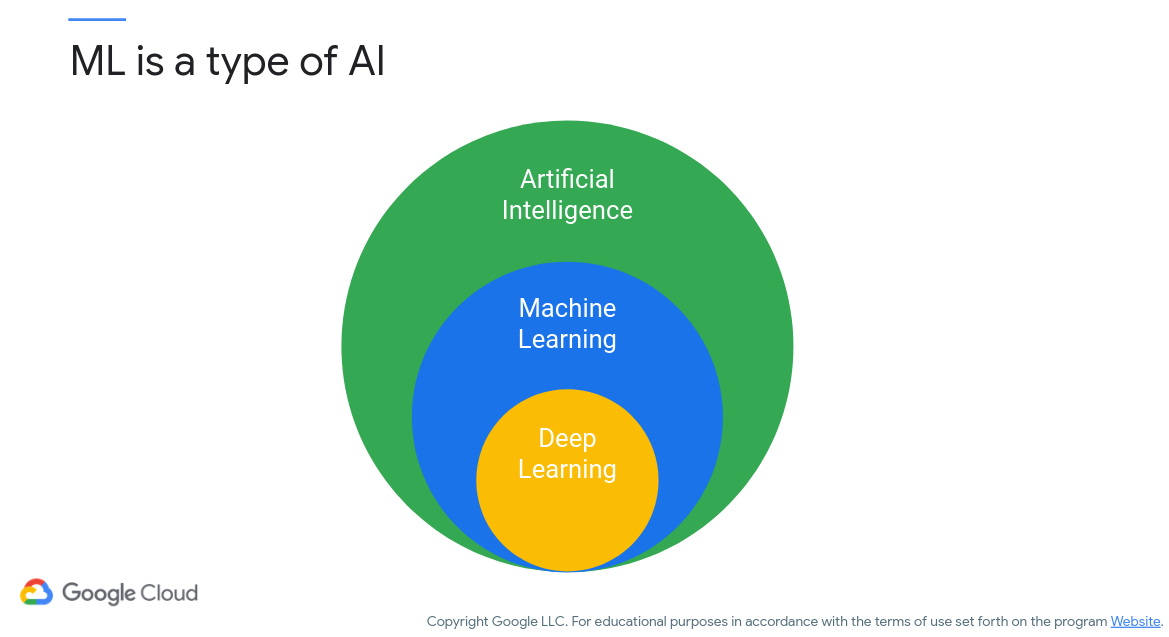

Where does Machine Learning Fit?

An interesting question might be how ML fits in with other topics such as artificial intelligence and deep learning (otherwise known as two of the other big “decision-making” buzzwords out there right now). Effectively, ML sits in between as a toolset used by the field of artificial intelligence (i.e., used to make decisions) and uses deep learning techniques (i.e., generally some form of neural networking) to form those patterns used to create models. Each of those topics is an entire field of study in and of itself; however, keep in mind that the benefits start to appear at scale – ML techniques really shine with a lot of data and a lot of decisions to be made (i.e., don’t create an ML model to solve a single problem at one particular location, as that would be a waste of time, money, and effort).

Figure 7: What is ML

One of the really great things about the field of ML is that the barriers to entry are significantly lower than they were in prior years. Consider the massive amount of data available that can be used in the training of ML models (e.g., geographic information, medical imaging, etc.) along with the maturing of the ML field (i.e., algorithms such as ResNet have garnered wide acceptance and scrutiny), as well as the availability of open-source tools for performing ML tasks (e.g., TensorFlow). ML can be performed by anybody with the available tools and resources now; you aren’t limited to a massively-funded research program. We will be looking at a few examples in a little bit to get you some experience.

MASSIVE DISCLAIMER: Just because you can use ML doesn’t mean that you should without understanding it! ML models are being used to make safety-critical decisions (e.g., autonomous driving) that can have an impact on life and limb. Don’t make the assumption that just because a model is “trained” that it is perfect. A model must be thoroughly analyzed for correctness of behavior in as many situations as possible.

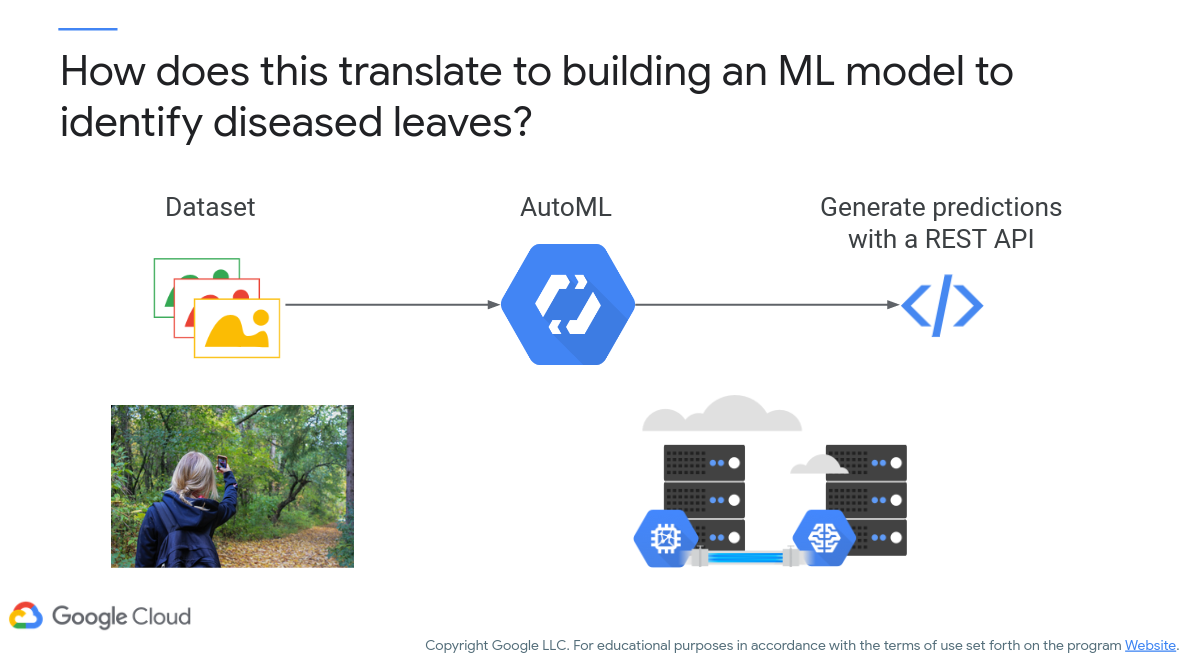

Let’s take a real-world example of ML. Let’s say you live in an area with a lot of trees. You notice that some leaves are beginning to look diseased and you’re worried about it spreading to other trees in the area (oddly enough, this would have been nice at an old place I lived at where the maple trees were acquiring some sort of disease). Here, you could take a lot of pictures with your camera on your phone of as many leaves as possible and feed that into an ML algorithm. Since we’re using the cloud, we’ll upload those images to Google Cloud and let [AutoML](https://cloud.google.com/automl) take care of the processing for us. Figure 8 (c/o Google) shows this process:

Figure 8: ML Example with AutoML

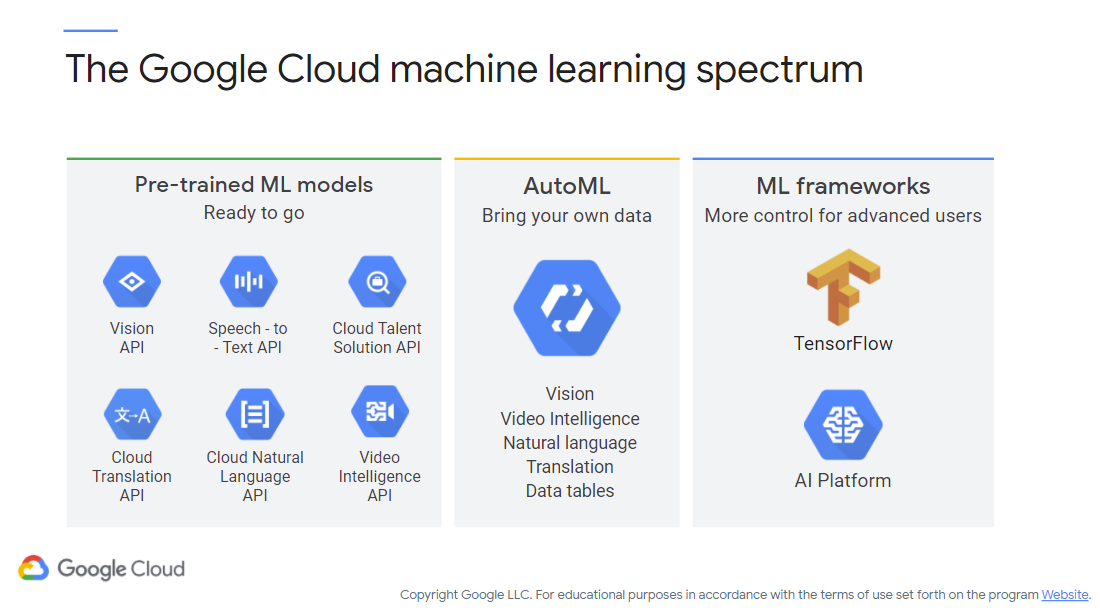

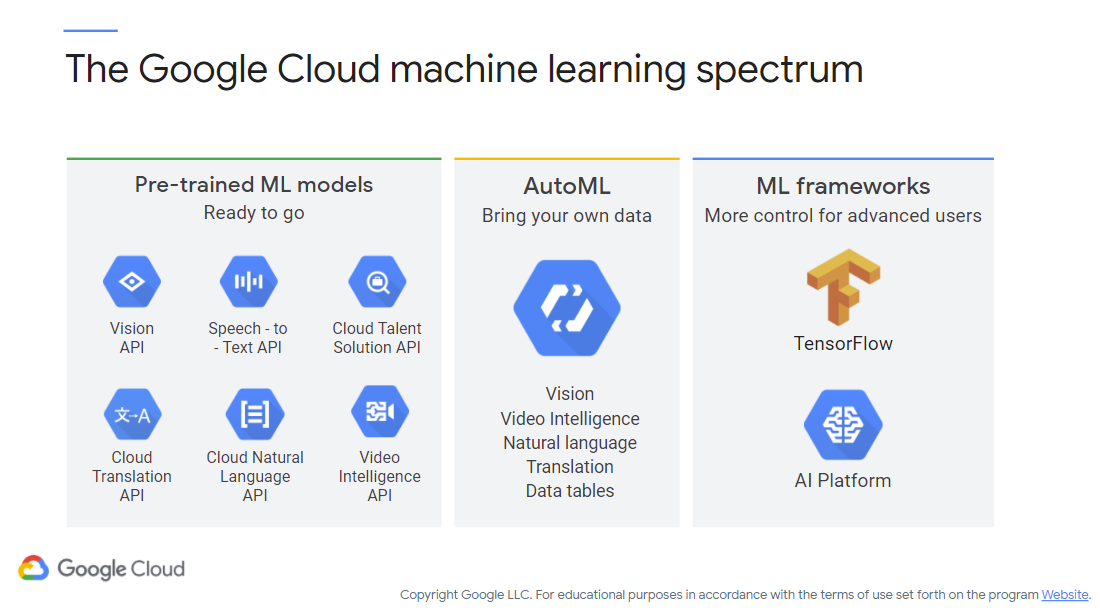

Here we leave the “magic” of the classification algorithm to AutoML, but the algorithm will be similar to what was described before. Label images, feed them into the algorithm, generate predictions (though this time via a RESTful API). Rinse, repeat. Figure 9 (c/o Google) shows you some of the different options that you have within the Google ecosystem – ML models and options will vary from provider to provider. Amazon has similar tools with AutoGluon, SageMaker, and Lambda.

Figure 9: Google Cloud ML Options

Here, you see that you have multiple options for running ML on your applications. You can use a pre-trained model (Vision API, Cloud Translation API, etc.). Basically, Google has already created (and is constantly updating) models for you to directly roll into your applications. This type of model would be best for somebody who only wants to use ML in their application without going deep into how to develop it – perhaps you want to replace user input or provide a better user experience. Note that, with this approach, you don’t need to provide a dataset for training (they’re pre-trained).

If you have data you want to bring to the fold, AutoML is an approach for leveraging pre-existing models that need to be trained. This type of approach would be good if you have a particular business need for ML, but still would rather use Google’s technologies and knowledge base.

On the other end you have customizable ML frameworks (e.g., TensorFlow, Vertex AI) that allow you to build your own ML algorithms and train them with your own data. This type of approach gives you full control over the entire process while still leveraging the cloud infrastructure.

Here is a delightful example of ML that attempts to recognize what you are doodling. It is fun and a nice break from this long and lengthy post, eh?

One thing to consider with such a tool/game is that each figure you draw is added to the database of shapes that the algorithm has seen. Something to consider when you play with online tools! Anyway, without going too deep into ML (again, that’s a separate class), here is what the Quick, Draw! game looks like behind the scenes (c/o Google):

Figure 10: Quick, Draw! Algorithm

This is a stylized version of a deep neural network that builds up layers and connections to discern the difference between objects (a dog and a cat shown). By following the connections from input to output, the algorithm arrives at a decision (it may not be the correct one). One of the labs you’ll be doing will build up an image recognition model.
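Following the connections from input to output can be sketched as a "forward pass" through a tiny network. This is a minimal illustration in plain Python, not the Quick, Draw! model: the layer sizes, the hand-picked weights, and the two input numbers are all hypothetical, and a real network would learn its weights from training data.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Each neuron: weighted sum of all inputs plus a bias, then a sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
            for neuron_weights, b in zip(weights, biases)]

def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical weights: one hidden layer (2 neurons) and one output layer (2 neurons).
layers = [
    ([[2.0, -1.0], [-1.5, 2.5]], [0.0, 0.1]),
    ([[3.0, -3.0], [-3.0, 3.0]], [0.0, 0.0]),
]
labels = ["dog", "cat"]

scores = forward([1.0, 0.2], layers)
decision = labels[scores.index(max(scores))]
print(decision, [round(s, 3) for s in scores])
```

The decision is simply the output neuron with the highest score, which is why the network always answers something, even when the answer is wrong.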

Now, let’s play around with a TensorFlow model. This tool gets you thinking about how an ML algorithm makes decisions. You can play with the amount of training data and a few other options, set the features that the algorithm will use to label the output, and choose how many layers/neurons per layer there are. You don’t necessarily need to understand a lot of the detailed technical jargon at this point – that mainly matters if you want to start developing your own models. However, I’d recommend this page (from IBM) for a quick overview on neural networks.

Bespoke ML Models with Vertex AI/AI Platform (i.e., fully flexible yet more difficult)

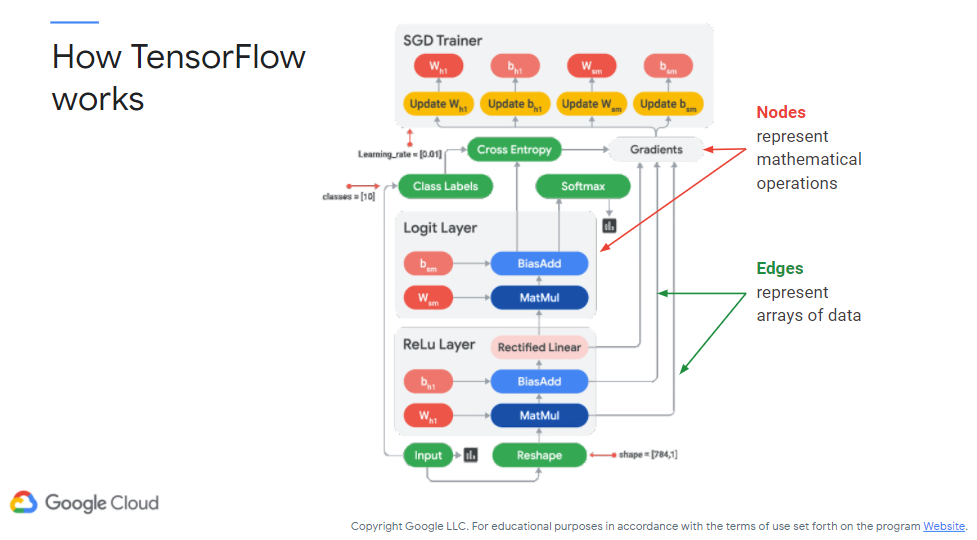

Warning: this section discusses TensorFlow - an open-source library (in Python) for numerical computation (not strictly ML). Figure 11 (c/o Google) shows how it works internally. There is a lot to unpack here that we won’t be going deeply into; however, effectively you’ll be creating directed acyclic graphs (DAGs) with TensorFlow. These graphs are not cyclical and have flow direction, so effectively your DAGs will be used to make decisions. In this figure the nodes represent mathematical operations, including “simple” operations like addition and “complex” operations like matrix multiplication. Edges in between nodes represent data transfer, that is, how data moves from node to node (to be transformed).

Figure 11: TensorFlow
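To see what "nodes are operations, edges are data" means, here is a minimal sketch of a dataflow graph evaluated in plain Python. It deliberately does not use the TensorFlow API; the node names (`product`, `output`), the `evaluate` helper, and the input values are all hypothetical.

```python
def matmul(a, b):
    """Matrix multiplication on nested lists (a "complex" operation)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise addition on nested lists (a "simple" operation)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# The graph: node name -> (operation, names of the nodes feeding into it).
graph = {
    "product": (matmul, ["x", "w"]),
    "output": (add, ["product", "b"]),
}

def evaluate(node, graph, feeds, cache=None):
    """Walk the edges backwards from a node, computing each operation once."""
    cache = {} if cache is None else cache
    if node in feeds:               # an input: data supplied from outside
        return feeds[node]
    if node not in cache:
        op, inputs = graph[node]
        cache[node] = op(*(evaluate(n, graph, feeds, cache) for n in inputs))
    return cache[node]

feeds = {"x": [[1.0, 2.0]], "w": [[3.0], [4.0]], "b": [[0.5]]}
result = evaluate("output", graph, feeds)
print(result)  # [[11.5]]
```

Because the graph has no cycles, every node can be computed after its inputs, and the same graph description can be evaluated with different input data.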

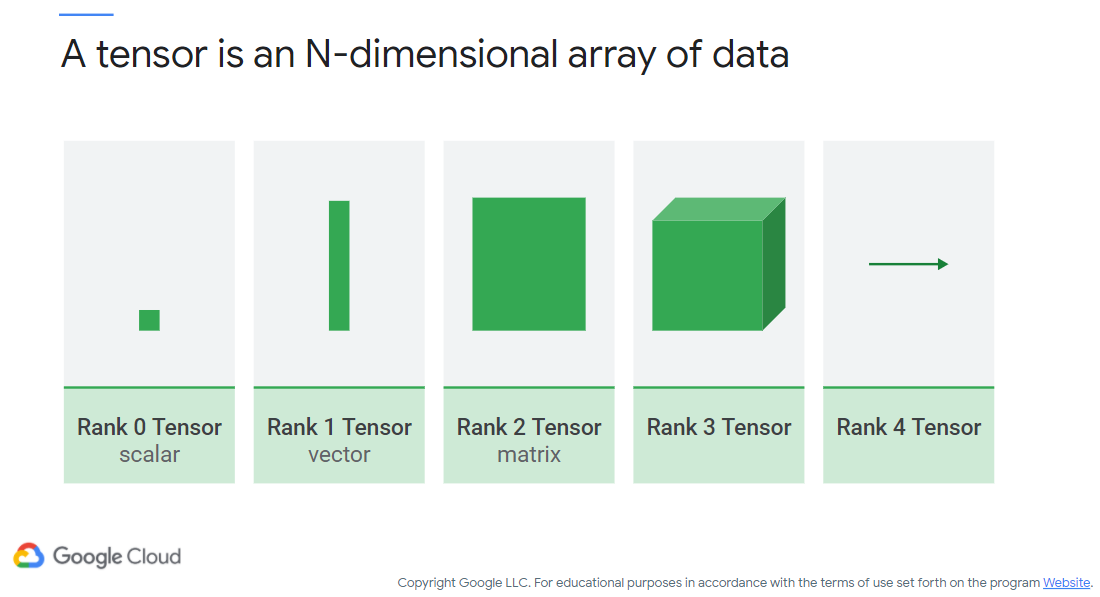

The following figure (c/o Google) shows what a Tensor actually is – an N-dimensional array of data. Based on the needs of your application you define which dimensionality is required: from a 0-D scalar (a single value), to a 1-D vector (a list of values), to a 2-D matrix (array of arrays, list of lists, etc.), and on up to however many dimensions are required.

Figure 12: TensorFlow Data
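As a rough illustration of dimensionality, the sketch below represents tensors as nested Python lists and reports their rank (number of dimensions) and shape, using the usual convention that a scalar has rank 0. The `shape` helper is hypothetical and assumes non-empty, rectangular nesting; real libraries track this for you.

```python
def shape(tensor):
    """Return the shape of a nested-list tensor, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]          # assumes every level is non-empty and regular
    return tuple(dims)

scalar = 3.0                                              # rank 0
vector = [1.0, 2.0, 3.0]                                  # rank 1
matrix = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]               # rank 2
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # rank 3

for name, tensor in [("scalar", scalar), ("vector", vector),
                     ("matrix", matrix), ("cube", cube)]:
    print(name, "rank", len(shape(tensor)), "shape", shape(tensor))
```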

One of the nifty concepts with TensorFlow is that the graphs you build can be ported from device to device. You could, for instance, train your model in a cloud-based environment and then run the model on live data from your phone. You essentially port your DAG (created in Python and stored as a SavedModel) to whichever language runs natively on your device of choice. Keras is another option for you to develop ML models (using deep neural networks) if you would prefer another strategy. (If interested - here is a breakdown of Keras vs. TensorFlow).

When dealing with ML we also need to be concerned with the amount of data that we are managing. As stated before, you need a lot of data for a good training procedure, meaning that there is a high probability it won’t fit into memory. For instance, common ML frameworks in Python, R, etc. will use packages that support in-memory datasets – meaning that once you leave the realm of RAM you’re out of luck (without extension). That means we need to batch our data. Effectively, you split up your data into batches and train per-batch. We also will need to consider distribution, where training is scaled-out. If you scale-up (get a bigger machine, more GPUs, etc.) you won’t necessarily find a great advantage – more power lies in more machines than absurdly-expensive single machines.
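Batching can be sketched with a plain Python generator. In this minimal example the "training step" is just a running mean (an assumption standing in for a real model update), and the data stream is a made-up sequence of numbers; the point is that only one batch is held in memory at a time.

```python
def batches(records, batch_size):
    """Yield successive batches so only one batch is in memory at a time."""
    batch = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:                       # final, possibly smaller, batch
        yield batch

def running_mean(stream, batch_size):
    """Toy per-batch update: accumulate a sum and a count batch by batch."""
    total, count = 0.0, 0
    for batch in batches(stream, batch_size):
        total += sum(batch)
        count += len(batch)
    return total / count

# Hypothetical data source: a generator, so the full dataset is never a list.
data_stream = (float(i) for i in range(1, 1001))
mean = running_mean(data_stream, batch_size=64)
print(mean)  # 500.5
```

Distributed training follows the same shape: different machines work on different batches and their updates are combined.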

You also will want to try and avoid sampling data to get into the sweet spot of in-memory processing. By sampling you are actively discarding valuable inputs, meaning that your model will most likely be missing important information! You also may wish to consider pre-processing your input datasets and creating features manually to help your algorithm. Such activities can include scaling data, encoding data, etc. to make batch/distributed processing far more tenable.
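Two of the pre-processing steps mentioned above, scaling and encoding, can be sketched in a few lines. This is a minimal illustration with made-up columns (`ages`, `colors`); min-max scaling and one-hot encoding are common choices but only two of many.

```python
def min_max_scale(values):
    """Rescale numbers into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:                    # avoid dividing by zero on constant columns
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Turn each category into a 0/1 vector, one slot per distinct category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories

# Hypothetical raw columns from a dataset.
ages = [20, 30, 40, 60]
colors = ["red", "green", "red", "blue"]

scaled = min_max_scale(ages)
encoded, categories = one_hot(colors)
print(scaled)      # [0.0, 0.25, 0.5, 1.0]
print(categories)  # ['blue', 'green', 'red']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]
```

One caveat for batch or distributed processing: the minimum, maximum, and category list need to be computed once over the whole dataset (or fixed in advance) so that every batch is transformed the same way.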

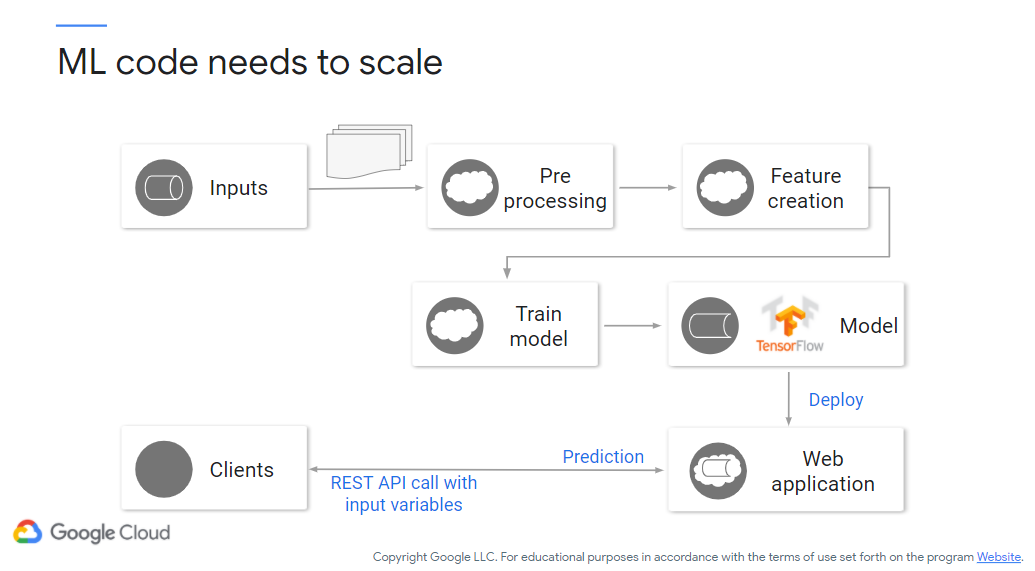

Figure 13 (c/o Google) shows how we can scale our ML activities using cloud processing. Assuming we are able to get a dataset, pre-process it, and perform our training activities (all either locally or cloud-based), we’ll then naturally want to deploy the model so that it is usable. Training is one problem, using it is another! We now need to consider the number of prediction queries per second we’ll need (i.e., how many users making how many calls and expecting a certain response per second). Also, what happens when the model needs to change – how do we re-deploy it seamlessly? From Google Cloud (at least) we can invoke TensorFlow as we would any other cloud resource. Note that re-training may need to occur as well, and if we have properly deployed our activities to the cloud we should also be able to seamlessly re-train as necessary.

Figure 13: Scaling ML

Vertex AI / AI Platform

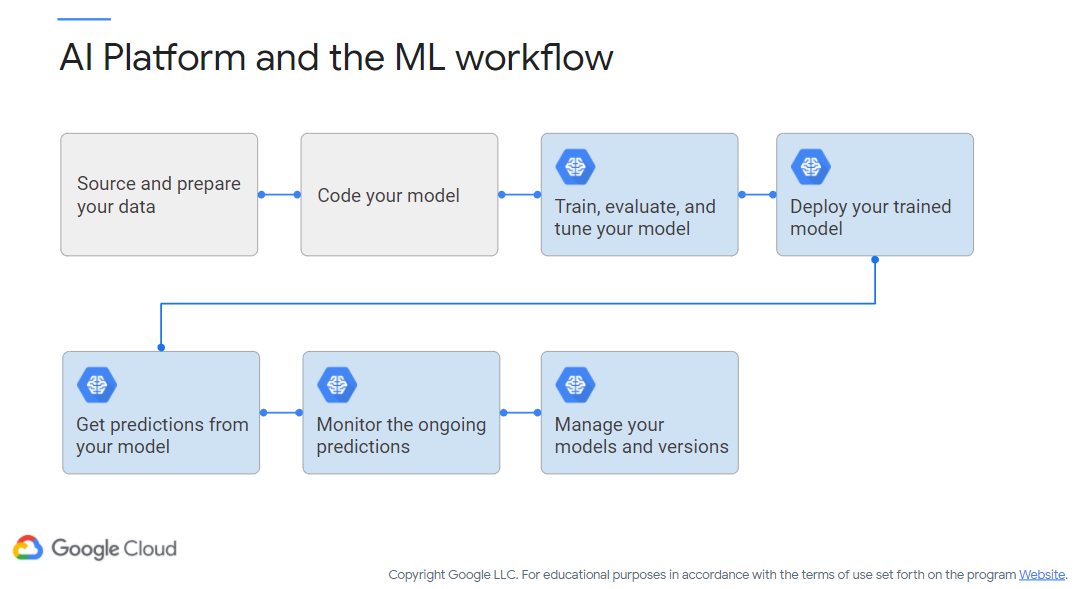

I keep using the word “seamlessly” to describe ML in the cloud. From Google’s perspective, the AI Platform is the service we’ll use to support that. It can distribute pre-processing activities, train the model, and deploy the trained model to your desired service (via RESTful API calls). Figure 14 (c/o Google) shows where the AI Platform (note the icon) falls in the general ML workflow.

Figure 14: AI Platform Workflow

Interestingly, AI Platform is in the process of being deprecated. It is still available to use; however, it is being moved into Vertex AI.

Next, we’ll go through a demo on both a Vertex AI and an AI Platform codelab. Definitely check it out if you can!

There are some metrics in these videos that we haven’t touched on yet, but they are useful for determining how well your AI/ML algorithms perform. This article from TowardsDataScience is very helpful in explaining the particular metrics you’ll care about (including true/false positives/negatives, AUC, ROC, precision/recall, etc.).
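As a small worked example of those metrics, the sketch below counts true/false positives/negatives for a binary classifier and derives precision, recall, and accuracy from them. The label names and the two lists of labels are invented for illustration.

```python
def confusion_counts(actual, predicted, positive):
    """Count true positives, false positives, true negatives, false negatives."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive and a != positive:
            fp += 1
        elif p != positive and a != positive:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def precision_recall(tp, fp, fn):
    """Precision: how many flagged items were right. Recall: how many real ones were found."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical ground truth and model output for eight X-ray images.
actual    = ["tumor", "tumor", "tumor", "clear", "clear", "clear", "clear", "tumor"]
predicted = ["tumor", "clear", "tumor", "clear", "tumor", "clear", "clear", "tumor"]

tp, fp, tn, fn = confusion_counts(actual, predicted, positive="tumor")
precision, recall = precision_recall(tp, fp, fn)
accuracy = (tp + tn) / len(actual)
print(tp, fp, tn, fn)                # 3 1 3 1
print(precision, recall, accuracy)   # 0.75 0.75 0.75
```

In a setting like tumor detection, a false negative (a missed tumor) is usually far more costly than a false positive, which is why recall is often watched more closely than raw accuracy.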

- Module video: Vertex AI Chatbot demo [12:27]

- Module video: AI Labs [14:34]

- Module video: (Soon deprecated!) AI Platform Demo (1/2) [14:53]

- Module video: (Soon deprecated!) AI Platform Demo (2/2) [13:55]

- Codelab: AI Platform + Kaggle

- Kaggle (Data Science Community)

AutoML (i.e., less flexible but easier)

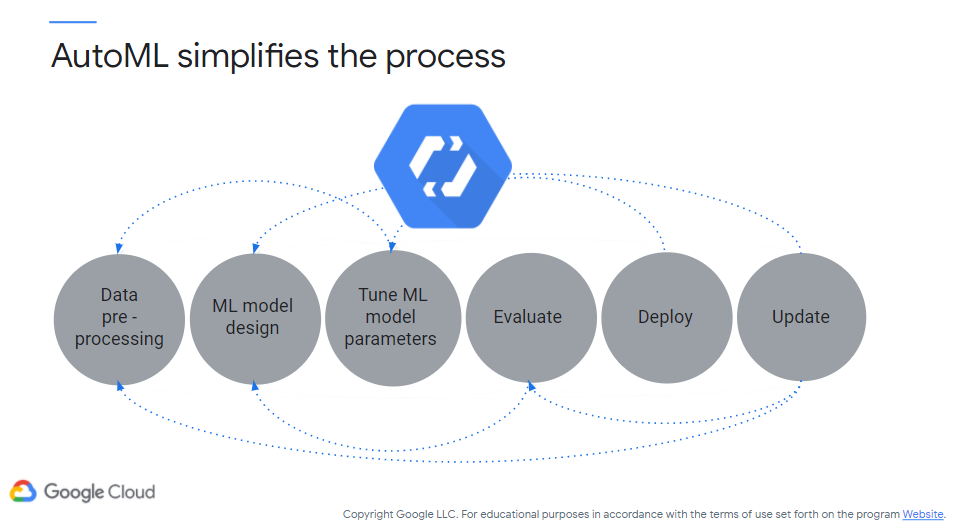

Ok, that was the heavy lifting where you have full control over every step of the process. Time now to let Google do some of the heavy lifting with AutoML. The core takeaway with AutoML is that there is no requirement to develop a model or have a training/serving infrastructure in place – Google handles that. Also, in theory, you require far less training data as well. You are basically using Google’s prior knowledge for common tasks to support your business needs. Figure 15 (c/o Google) shows the overall simplification of the ML process.

Figure 15: AutoML Process

AutoML has some pre-baked models that you can use for your applications:

- Vision - upload your images to Google and train models based on your needs.

- Video Intelligence - classify/track objects in uploaded videos.

- Natural Language - classify/extract/detect sentiment in text (based on your own domain-specific keywords/phrases).

- Translation - train a custom model based on provided language pairs.

- Tables - perform ML on structured (tabular) data.

Codelab - Train and Deploy On-Device Image Classification Model with AutoML Vision in ML Kit

Pre-Trained ML APIs (i.e., Google does all the work for you)

Google (plus other providers) also gives you pre-trained ML models (accessible via an API) to perform common tasks so that you don’t have to worry about training or developing the model itself.

The downside, while hopefully obvious, is that you have zero control over the fidelity of the model.

Here are some of the pre-trained models available for use:

- Vision API

- Text-to-Speech / Speech-to-Text API

- Cloud Translation API

- Natural Language API

- Video Intelligence API

Here is a demo video walking through Speech to Text and Cloud Shell!

- Module video: Speech to Text API Demo [11:42] and Associated Codelab

For some more fun, here is a pair of Qwiklabs demos: one very similar to the demo above (Speech to Text), and one using Vertex AI for some image generation.

- Module video: Speech to Text / Vertex AI Qwiklabs [14:33] and associated Qwiklabs: Speech-to-Text API: Qwik Start, Build an AI Image Generator app using Imagen on Vertex AI.

We will leave the details to each of their linked pages, however they all boil down to “call an API to perform the titled action.”
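To give a feel for what "call an API" looks like, here is a minimal sketch that only builds the JSON request body for a Speech-to-Text recognize call; it does not send anything. The field names follow my understanding of the v1 REST request format, so treat them as an assumption and check the linked API page before relying on them. The bucket path is a hypothetical placeholder, and authentication (an API key or access token) is left out entirely.

```python
import json

# Assumed endpoint for the Speech-to-Text v1 synchronous recognize method.
ENDPOINT = "https://speech.googleapis.com/v1/speech:recognize"

def build_recognize_request(gcs_uri, language_code="en-US"):
    """Build the request body: how to decode the audio, and where the audio lives."""
    return {
        "config": {"encoding": "FLAC", "languageCode": language_code},
        "audio": {"uri": gcs_uri},
    }

# Hypothetical audio file in a Cloud Storage bucket.
body = build_recognize_request("gs://your-bucket/your-audio.flac")
payload = json.dumps(body, indent=2)

print("POST", ENDPOINT)
print(payload)
```

The other pre-trained APIs follow the same pattern: a small JSON document describing the input goes in, and a JSON document with the model's result comes back.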

Here is a short video from Bloomberg/Google on how they use Cloud Translation API to support their business activities: Bloomberg uses Google Translate to share breaking news with the world (1:50)

In case you want more practice:

QwikLabs - Cloud Natural Language API: Qwik Start (GSP097) [1 credit]

Also, Generative AI

I needed to include this as it is bleeding-edge new, but want to preface that I have never played with this technology myself. If you want to try your hand at Generative AI / LLMs, here you go: Generative AI Overview.

Fun stuff! The assignments are in Blackboard for you to go through!

Additional Resources

- ResNet algorithm

- AutoGluon, SageMaker, and Lambda

- Quick, Draw! - Google

- TowardsDataScience - ML Metrics

- Vision API

- Text-to-Speech

- Speech-to-Text API

- Cloud Translation API

- Natural Language API

- Video Intelligence API

- Generative AI Overview

- Getting started with ML: 25+ resources recommended by role and task

Where noted, the original content was provided by Google LLC and modified for the purpose of the course, without input or endorsement from Google LLC.